Stay Ahead of the Curve

Latest AI news, expert analysis, bold opinions, and key trends — delivered to your inbox.

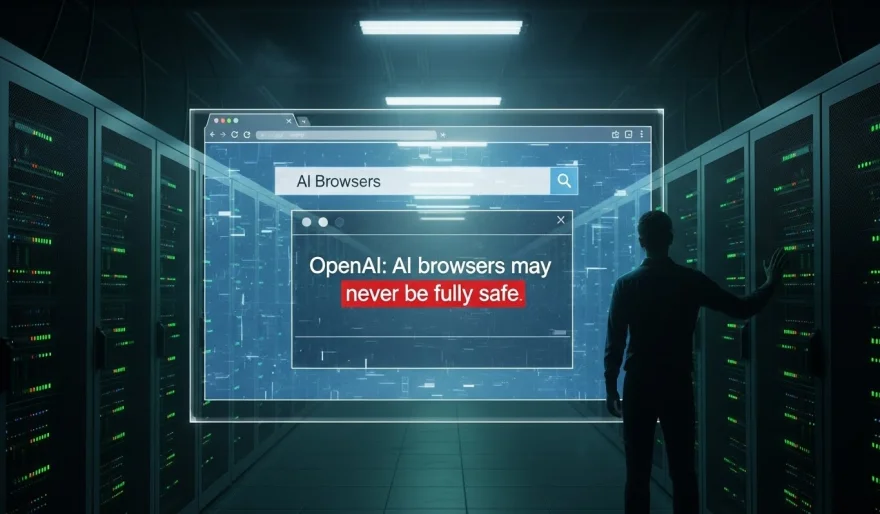

OpenAI admits the uncomfortable truth: AI browsers may never be fully safe

6 min read To counter this, the company is using an AI “attacker” to simulate and stress-test its agents, uncovering flaws faster than human red teams. But even with these defenses, prompt injections remain a persistent, long-term security challenge for AI agents on the open web. December 23, 2025 13:57

OpenAI is saying the quiet part out loud.

Even as it hardens its new Atlas AI browser, the company is acknowledging that prompt injection attacks aren’t going away — and may never be fully solved. That admission raises a bigger question for the entire industry: how safely can AI agents actually operate on the open web?

In a new blog post, OpenAI compares prompt injection to scams and social engineering — a class of problems that can be reduced, but not eliminated. The company concedes that ChatGPT Atlas’ agent mode expands the security threat surface, making the browser more powerful, but also more vulnerable.

What prompted the warning

Atlas launched in October, and it didn’t take long for security researchers to poke holes in it. Within hours, demos surfaced showing that a few lines of hidden text in Google Docs could alter the browser’s behavior.

Brave quickly followed with its own analysis, arguing that indirect prompt injection is a systemic problem across AI browsers — not just Atlas, but competitors like Perplexity’s Comet as well.

OpenAI isn’t isolated here. Earlier this month, the UK’s National Cyber Security Centre issued a similar warning: prompt injection attacks against generative AI systems “may never be totally mitigated.” Their advice was blunt — stop trying to “solve” the problem, and focus instead on reducing impact.

Why this matters

AI browsers aren’t passive tools. They read, reason, click, summarize, and act on your behalf. When that behavior can be influenced by hidden instructions buried in web pages or emails, the risk profile changes dramatically.

This isn’t about a chatbot saying something weird. It’s about agents:

-

Taking unintended actions

-

Exposing sensitive data

-

Being steered into long, multi-step harmful workflows

As AI agents become more autonomous, prompt injection becomes less like a bug and more like an attack surface.

OpenAI’s approach: fight AI with AI

OpenAI’s response isn’t denial — it’s escalation.

The company says prompt injection is a long-term security challenge, and its strategy centers on a fast, continuous defense cycle. The most interesting part? An LLM-based automated attacker.

This attacker is an AI trained via reinforcement learning to behave like a hacker. It actively looks for ways to smuggle malicious instructions into Atlas, tests them in simulation, studies how the target AI reasons about the attack, and then iterates — over and over.

Because it can see how the agent thinks internally, the bot can discover weaknesses faster than external attackers, at least in theory. OpenAI claims it has already uncovered novel attack strategies that didn’t surface during human red-teaming or external reporting.

How this compares to rivals

The broader industry is converging on a similar conclusion: there’s no silver bullet.

Anthropic and Google have both argued for layered defenses and continuous stress-testing. Google, in particular, has been pushing architectural and policy-level controls for agentic systems — guardrails baked into how agents reason and act.

OpenAI’s differentiator is speed and automation: using AI to relentlessly attack its own AI before someone else does.

The bigger picture

This is a reality check for anyone excited about autonomous AI agents.

The more capable these systems become, the harder they are to fully control — especially in the messy, adversarial environment of the open web. Prompt injection isn’t a temporary flaw; it’s a structural risk.

OpenAI isn’t claiming victory here. It’s admitting that AI security will be an ongoing arms race, not a problem with a finish line.

And for AI browsers, that may be the most honest update yet.

AI Agents

AI Agents