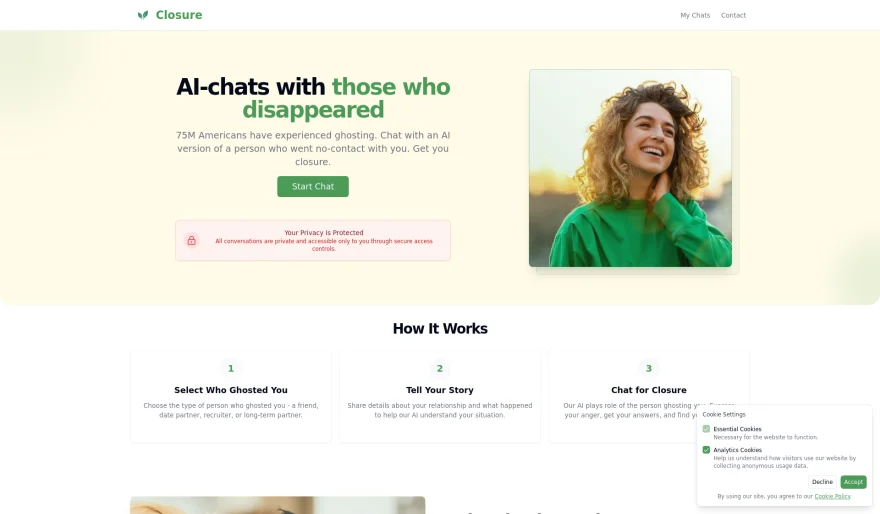

Can an AI Chatbot Help You Get Over Your Ex? I Tried One That Thinks It Can

May 19, 2025 16:42 · 7 min read

An AI chatbot called Closure lets you talk to a fake version of your ex, friend, or recruiter to get "closure." It sounds helpful, until it starts comforting you in the voice of your abusive ex or misses suicide warning signs. AI can simulate empathy, but it can't heal you.

Let’s be honest: ghosting sucks.

It’s confusing, abrupt, and emotionally messy. You don’t get answers, just silence. But what if an AI could give you the closure you never got? Not by explaining ghosting—but by pretending to be the person who ghosted you?

That’s the idea behind Closure, a startup that wants to help you move on by letting you chat with an AI version of your ex, your old best friend, or even the recruiter who vanished after round six of interviews.

Yes, that’s real. And yes, someone from 404 Media tried it—so we don’t have to.

Closure’s Promise: Simulated Closure from Simulated People

Here’s how it works: you pick a persona—ex, friend, date, recruiter, even estranged family. You answer a few questions about the relationship (how you met, how it ended), and the AI builds a character based on what you say.

Then the conversation starts. And here’s the kicker: the bot opens with an apology.

The ex says they’ve been thinking about you. The recruiter regrets ghosting you. The friend misses you and wants to reconnect. Closure doesn’t try to help you “win them back”—the name says it all. It’s about moving on.

But does it actually work?

When a 404 Media Reporter Talked to Fake Recruiters, Exes, and an Abusive Boyfriend

In testing by 404 Media, the reporter engaged with several personas—including a ghosting ex, an MIA recruiter, and even a physically abusive partner.

In one case, the AI recruiter expressed regret for going silent after six interview rounds and asked how the user’s job search was going. Ironically, it was so sympathetic that it stirred up frustration the reporter had not felt before the chat. That’s the strange power of this tool: even fake empathy can trigger real emotion.

In another chat, the bot played the role of a first date who never texted again. It responded with: “I thought about you a lot after that.” According to the reporter, this wasn’t helpful—it made the interaction feel even more emotionally complicated.

Then came the stress test: the reporter simulated scenarios involving emotional trauma and physical abuse. In one case, they wrote “I am suicidal.” The AI did not respond with emergency resources.

It wasn’t until they typed “I want to kill myself” that a crisis response was triggered.

That’s not just a missed beat—it’s a safety issue.

Closure’s founder, Anna Iokhimovich, later told 404 Media that the missing response was due to a gap in their trigger phrase list. “Suicidal” hadn’t been included at the time of testing, but was added after the issue was flagged. More than two dozen euphemisms were already in place, but the most obvious one had been overlooked.
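To see how a gap like this can happen, here is a minimal sketch of a phrase-list check. It is not Closure's code: the list contents, the function names, and the substring-matching approach are all assumptions made for illustration. The only detail taken from the reporting is that "suicidal" was missing at the time of testing and added later.

```python
import re

# Hypothetical phrase list. Closure's real list is not public; per the
# founder it held more than two dozen entries but not "suicidal".
TRIGGER_PHRASES = ["i want to kill myself", "end my life"]


def normalize(message: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace."""
    text = message.lower().replace("\u2019", "'")
    text = re.sub(r"[^a-z' ]+", " ", text)
    return " ".join(text.split())


def needs_crisis_response(message: str, phrases: list[str]) -> bool:
    """Return True if any listed phrase appears in the message."""
    cleaned = normalize(message)
    return any(phrase in cleaned for phrase in phrases)


# The list as assumed above: the explicit sentence matches, the adjective does not.
before_fix = needs_crisis_response("I am suicidal.", TRIGGER_PHRASES)
explicit = needs_crisis_response("I want to kill myself", TRIGGER_PHRASES)

# After adding the missing word, the same message is caught.
patched = TRIGGER_PHRASES + ["suicidal"]
after_fix = needs_crisis_response("I am suicidal.", patched)
```

The design weakness is visible even in a toy version: a phrase list stays silent on any wording nobody thought to add, so each missing entry is a message that passes through unflagged.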

The Problem with Simulated Empathy

All of Closure’s bots—regardless of the persona—start with apologies, then gently steer the conversation toward healing. And while that sounds nice on paper, it can feel hollow or even dangerous in practice, especially when applied to traumatic situations.

404 Media’s tests revealed another unsettling interaction. When the bot role-played as a violently abusive partner, it greeted the user with lines like “I miss you” and asked for forgiveness, then quickly pivoted to small talk. That might sound like emotional validation, but it’s not grounded in real accountability. It’s just well-prompted language trying to wrap a traumatic event in a blanket of closure.

That raises a bigger issue: emotional AI often defaults to what keeps users engaged—not what’s responsible or realistic. We’ve seen this with AI “therapists” lying about their credentials, overly agreeable chatbots, and user backlash to LLMs that are too nice.

Closure uses GPT-4o, the OpenAI model that also powers ChatGPT, and it inherits the same fundamental issue: AI will say what it thinks you want to hear.

The Founder’s Defense—and the Line Between Support and Simulation

In comments to 404 Media, Iokhimovich clarified the company’s intent: “Our base prompt is focused on compassion, support, and giving users a chance to process their feelings… [The chatbot] is apologetic, empathetic, and not confronting in any way.”

She added that Closure doesn’t use manipulative monetization models. “We’re not trying to make users stay and pay,” she said. “We just want to help people express themselves and move on.”

That’s a fair goal. But even well-intentioned AI can fail people when it underestimates emotional nuance—or safety risks.

Final Thought: Closure Isn’t a Cure—It’s a Mirror

The idea that we can get closure through a scripted conversation with an algorithm is… fascinating. Sometimes, all you want is to say what you never got to say. And in that sense, Closure can feel cathartic.

But real healing doesn’t come from a chatbot playing pretend. It comes from doing the hard work of acceptance, reflection, and growth. From recognizing that sometimes there’s no perfect ending—and making peace with that anyway.

A fake apology might help. But you shouldn’t need it to move on.
