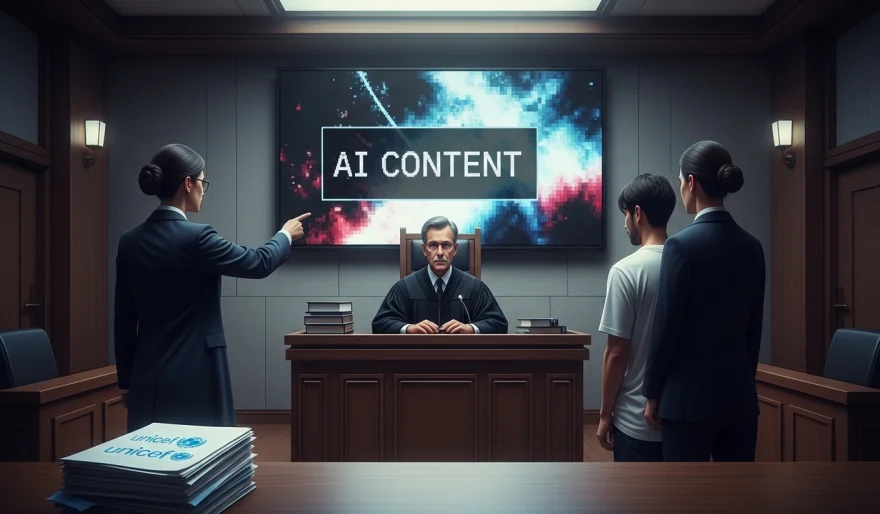

AI Just Crossed a Red Line — UNICEF Wants It Criminalized

UNICEF is calling for the criminalization of AI-generated child abuse content, warning that generative AI is making it easier to create fake but harmful images of children.

February 05, 2026 13:22

AI-generated child abuse content is no longer a “future risk.”

It’s happening now — and UNICEF wants governments to treat it as a serious crime.

The UN children’s agency is calling for the global criminalization of AI content that depicts child sexual abuse, warning that generative AI tools are making it easier, faster, and cheaper to create fake but harmful imagery of children.

Unlike traditional abuse material, these images can be produced without physical victims — but the psychological, legal, and societal damage is just as real.

What’s Actually Changing?

AI models can now:

- Generate hyper-realistic fake images and videos.
- Mimic real children using minimal data.
- Evade existing laws that were written before generative AI existed.

That means many countries still don’t have clear laws to prosecute AI-generated child abuse content.

Why This Matters (Big Picture)

This is a turning point for AI regulation.

For years, AI policy debates focused on:

- Jobs
- Copyright
- Misinformation

Now, the conversation is shifting to something darker:

AI as a tool for serious crime.

If governments act on UNICEF’s call, it could:

- Force stricter rules on AI model training and deployment.
- Push companies like OpenAI, Anthropic, Meta, and Google to tighten safeguards.
- Bring global AI laws into force sooner than tech companies expected.

The Uncomfortable Truth

AI isn’t just disrupting industries anymore.

It’s challenging the limits of law, ethics, and human safety.

And this time, the world can’t afford to move slowly.
